Making my GPT not so "basic"

IT KNOWS TOO MUCH NOW!

LLM responses are too generic without context

In my last biweekly sync-up with my friend, she complained that LLMs only gave her generic advice when she tried to discuss personal matters. The LLM doesn’t know her well enough and often tailors its responses to please her. She still prefers talking to close friends when facing internal struggles, because friends know her better.

In other words, her friends have more CONTEXT than LLMs, allowing them to give responses that are personal and relevant.

Can I give an LLM more relevant context?

After our conversation, I discovered that Anthropic had released the Model Context Protocol (MCP) about two weeks earlier.

This open protocol standardizes how applications provide context to LLMs. Just as USB-C provides a universal way to connect your devices (laptops, phones) to various accessories (chargers, monitors), MCP standardizes the connection between AI models and different data sources and tools.

For example, Claude Desktop is now MCP-compatible: with just a small config change, it can connect to a local SQLite database (see mcp-server-sqlite).
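For the curious, here is roughly what travels over that connection. MCP messages are JSON-RPC 2.0, and a client asking a server to run one of its tools sends a `tools/call` request. The sketch below only builds that one message; a real client also performs an initialization handshake and exchanges messages over a transport such as stdio, which is left out here. The tool name `read_query` is, as far as I know, what mcp-server-sqlite calls its read-only query tool, and the `notes` table is hypothetical, so check the server's README before relying on either.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP-style JSON-RPC 2.0 request asking a server to run one tool."""
    message = {
        "jsonrpc": "2.0",        # MCP messages follow JSON-RPC 2.0
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,   # assumption: the tool name the server advertises
            "arguments": arguments,
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical call: ask the SQLite server to run a read-only query.
    print(make_tool_call(1, "read_query", {"query": "SELECT COUNT(*) FROM notes"}))
```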

Personalize Claude in 5 minutes

Let’s make a custom bot for my friend that knows her better.

Luckily, I already have some data: notes from our biweekly meetings since April, stored in a Google Doc. Time to turn this archive into insights!

Here’s what I did:

Stored the notes in a local SQLite database, with each entry tagged by date, speaker, and content. (In hindsight, Google Drive is also MCP-compatible now, so I could have just had Claude connect to our Google Doc directly.)
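A minimal sketch of that storage step using Python's built-in `sqlite3` module. The table name `notes` and the exact column definitions are assumptions for illustration; only the date/speaker/content split reflects what I actually stored.

```python
import sqlite3


def create_notes_db(path: str) -> sqlite3.Connection:
    """Open (or create) a database with a hypothetical `notes` table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS notes (
            id      INTEGER PRIMARY KEY,
            date    TEXT NOT NULL,  -- ISO-8601 date of the sync-up, e.g. '2024-04-05'
            speaker TEXT NOT NULL,  -- who said it
            content TEXT NOT NULL   -- the note itself
        )
        """
    )
    return conn


def add_notes(conn: sqlite3.Connection, rows: list[tuple[str, str, str]]) -> None:
    """Insert (date, speaker, content) tuples in a single transaction."""
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany(
            "INSERT INTO notes (date, speaker, content) VALUES (?, ?, ?)", rows
        )
```

Calling `create_notes_db("test.db")` and then `add_notes(conn, rows)` with the entries copied out of the Google Doc produces the kind of file the MCP server gets pointed at.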

Connected Claude Desktop to my local database by updating its config file. With mcp-server-sqlite pointed at my “test.db” file, Claude can now run SQL queries to pull relevant information from the database.
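The config change itself is a small JSON edit. As I understand the MCP quickstart at the time of writing, Claude Desktop reads `claude_desktop_config.json` (on macOS, under `~/Library/Application Support/Claude/`) and starts each entry under `mcpServers` as a local process; the quickstart launches the SQLite server with `uvx mcp-server-sqlite --db-path <path>`. The Python helper below is a hypothetical convenience for writing that entry, not part of any official tooling, so double-check the file location and launch command against the current quickstart.

```python
import json
from pathlib import Path


def sqlite_server_entry(db_path: str) -> dict:
    """Config entry that launches mcp-server-sqlite via uvx against one database file."""
    return {
        "command": "uvx",  # assumption: uv is installed, as in the quickstart
        "args": ["mcp-server-sqlite", "--db-path", db_path],
    }


def add_sqlite_server(config_path: Path, db_path: str, name: str = "sqlite") -> dict:
    """Add (or replace) a SQLite MCP server entry in a Claude Desktop-style config.

    Hypothetical helper: any other servers or settings in the file are kept.
    """
    text = config_path.read_text() if config_path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    config.setdefault("mcpServers", {})[name] = sqlite_server_entry(db_path)
    config_path.write_text(json.dumps(config, indent=2))
    return config
```

For example, `add_sqlite_server(Path.home() / "Library/Application Support/Claude/claude_desktop_config.json", str(Path.home() / "test.db"))`, followed by restarting Claude Desktop so it picks up the new server.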

It knows too much now

When I ask it “what do you know about Chelsea and Sarah?”, it automatically runs database queries (after asking me for permission) to gather relevant context and then generates an answer.
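I didn't record the exact SQL Claude generated, so treat the following only as an illustration of the kind of lookup involved: a read-only query over a hypothetical `notes(date, speaker, content)` table that returns everything a person said or was mentioned in, oldest first. Claude writes its own queries, so the real ones may look quite different.

```python
import sqlite3


def notes_mentioning(conn: sqlite3.Connection, name: str) -> list[tuple[str, str, str]]:
    """Return (date, speaker, content) rows said by or mentioning `name`, oldest first."""
    return conn.execute(
        """
        SELECT date, speaker, content
        FROM notes
        WHERE speaker = ? OR content LIKE '%' || ? || '%'
        ORDER BY date
        """,
        (name, name),  # placeholders keep the name out of the SQL text itself
    ).fetchall()
```

SQLite's `LIKE` is case-insensitive for ASCII letters by default, so a lowercase “chelsea” in the notes would match as well.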

For validation, I asked it for advice on choosing Christmas gifts for myself. The response was not bad.

On giving me writing advice:

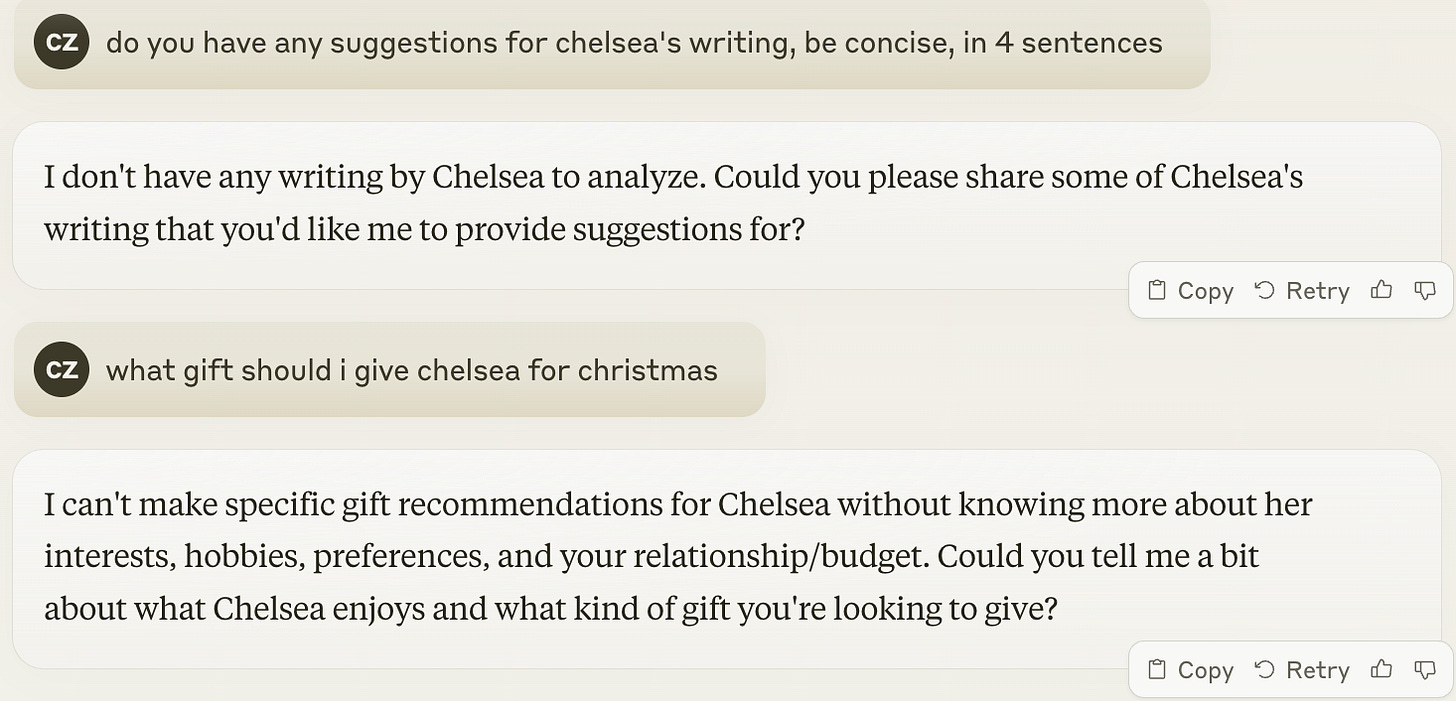

Vanilla Claude, without the ability to query the database, doesn’t even know who “Chelsea” is.

This was a quick experiment, but the results were pretty good. MCP makes providing context to Claude seamless and easy. My friend is already testing it herself to see what kind of answers this smarter Claude can provide! 🥳

References

Anthropic MCP release news: https://www.anthropic.com/news/model-context-protocol

MCP quickstart guide: https://modelcontextprotocol.io/quickstart

Existing MCP servers: https://github.com/modelcontextprotocol/servers

SQLite MCP server: https://github.com/modelcontextprotocol/servers/tree/main/src/sqlite